Project Overview

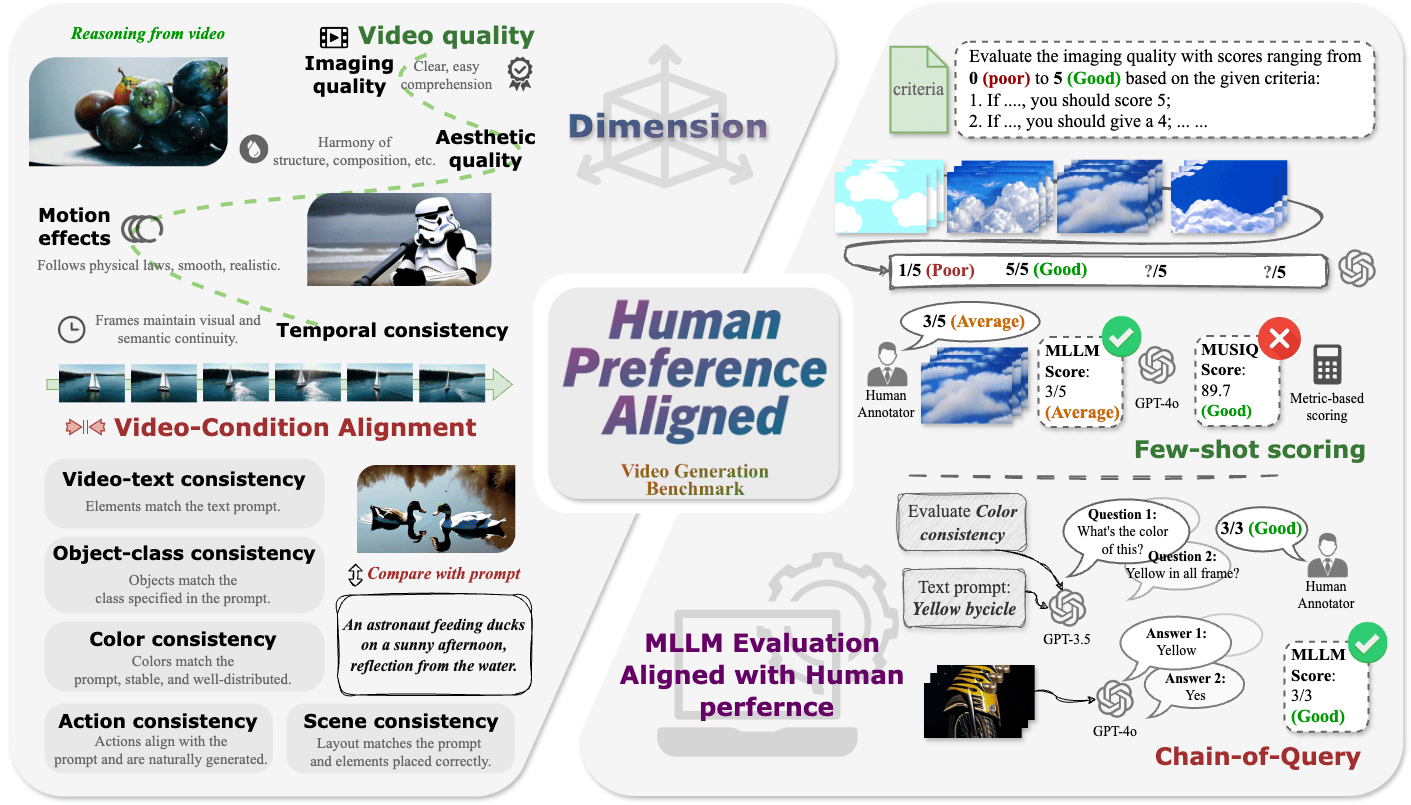

Video generation assessment is critical for ensuring that generative models produce visually realistic, high-quality videos that align with human expectations. However, current video generation benchmarks align poorly with human judgment. To address this, Video-Bench is introduced: a comprehensive benchmark that uses large language models (LLMs) to evaluate video generation quality. Its automated multimodal LLM evaluation improves alignment with human preferences. Experimental results show that Video-Bench significantly outperforms previous methods and provides more objective and accurate insights into generated video quality.

Main Results

Video-Bench Leaderboard

Higher scores indicate better performance. The best score in each dimension is highlighted in bold. "Overall Rank" orders models by their average rank across multiple evaluation dimensions; lower is better.

| Model | Video Quality | Video-Condition Alignment | Overall Rank |
|---|---|---|---|
| Gen3 | **4.66** | 4.38 | 1 |
| CogVideoX | 3.84 | **4.62** | 2 |
| VideoCrafter2 | 4.08 | 4.18 | 3 |
| Kling | 4.26 | 4.07 | 4 |
| Show-1 | 3.30 | 4.21 | 5 |
| LaVie | 3.00 | 3.71 | 6 |
| PiKa-Beta | 3.76 | 2.60 | 7 |
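The caption states that Overall Rank orders models by their average rank across multiple evaluation dimensions. The snippet below is a minimal sketch of that averaging. It assumes that higher scores rank first and that ties share the mean of their positions. The helper names `rank_desc` and `average_ranks` are hypothetical. Because the published Overall Rank averages over more dimensions than the two aggregate columns shown in the leaderboard, this sketch is not expected to reproduce it exactly.

```python
"""Sketch: rank models per dimension and average the ranks (lower is better).

Assumption: only the two aggregate dimensions shown in the leaderboard are
used here, so the result need not match the published Overall Rank, which
averages over more dimensions.
"""

from statistics import mean

# Scores copied from the leaderboard table: (Video Quality, Video-Condition Alignment).
SCORES = {
    "Gen3": (4.66, 4.38),
    "CogVideoX": (3.84, 4.62),
    "VideoCrafter2": (4.08, 4.18),
    "Kling": (4.26, 4.07),
    "Show-1": (3.30, 4.21),
    "LaVie": (3.00, 3.71),
    "PiKa-Beta": (3.76, 2.60),
}


def rank_desc(values: list[float]) -> list[float]:
    """Rank values so the highest gets rank 1; ties share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i], reverse=True)
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        # Extend j over the run of values tied with values[order[i]].
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # 0-based positions i..j become 1-based ranks
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def average_ranks(scores: dict[str, tuple[float, ...]]) -> dict[str, float]:
    """Average each model's per-dimension rank (lower is better)."""
    names = list(scores)
    n_dims = len(next(iter(scores.values())))
    per_dim = [rank_desc([scores[m][d] for m in names]) for d in range(n_dims)]
    return {m: mean(per_dim[d][i] for d in range(n_dims)) for i, m in enumerate(names)}


if __name__ == "__main__":
    for model, r in sorted(average_ranks(SCORES).items(), key=lambda kv: kv[1]):
        print(f"{model:14s} {r:.1f}")
```

On these two columns alone, VideoCrafter2 and Kling tie at 3.5, and PiKa-Beta (6.0) places ahead of LaVie (6.5). This shows that the two displayed columns do not by themselves determine the published Overall Rank.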

Human Preference Alignment Scores

Scores are Spearman's rank correlation coefficients; higher is better. The best score in each dimension is highlighted in bold. ComBench* [sun2024t2v] denotes a reproduction of ComBench using our benchmark metrics.

| Entities | Video Quality | Video-Condition Alignment | Average Score |
|---|---|---|---|
| HU - HU | **0.63** | 0.47 | **0.52** |
| HU - GPT | 0.51 | 0.47 | 0.41 |
| HU - HA | 0.61 | **0.50** | 0.50 |
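The alignment scores in this table are Spearman's rank correlation coefficients. The snippet below is a minimal sketch of that statistic. It assumes that tied scores receive average ranks, which is the usual convention, and computes rho as the Pearson correlation of the two rank vectors. `tied_ranks` and `spearman_rho` are hypothetical helper names. The rater scores in the usage block are made-up illustration data, not Video-Bench results.

```python
"""Sketch: Spearman's rank correlation between two raters' scores.

Assumption: both raters score the same items in the same order; ties receive
average ranks, and rho is the Pearson correlation of those ranks.
"""

from math import sqrt


def tied_ranks(values: list[float]) -> list[float]:
    """Rank values ascending (1 = lowest); tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def spearman_rho(x: list[float], y: list[float]) -> float:
    """Pearson correlation of the rank vectors of x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    rx, ry = tied_ranks(x), tied_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = sqrt(sum((a - mx) ** 2 for a in rx))
    sy = sqrt(sum((b - my) ** 2 for b in ry))
    if sx == 0 or sy == 0:
        raise ValueError("rho is undefined when a rater gives every item the same score")
    return cov / (sx * sy)


if __name__ == "__main__":
    # Hypothetical 1-5 scores from two raters on the same five videos.
    human = [4, 3, 5, 2, 4]
    model = [5, 3, 4, 1, 4]
    print(round(spearman_rho(human, model), 3))
```

The shortcut formula 1 - 6Σd²/(n(n² - 1)) is exact only when there are no ties. Computing the Pearson correlation on average ranks keeps the result correct when raters assign equal scores, which is common with coarse 1-5 scales.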

GitHub Repository

For more details, see the official Video-Bench GitHub repository.